|

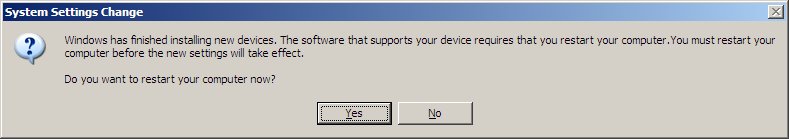

Typical reboot prompt

for Windows updates, this one mandatory so the user must stop all

work and reboot before the counter runs out. Seriously? |

Open Source Software vs. Commercial Software:

Migration from Windows to Linux

An IT Professional's Testimonial

Maintenance Headache of Windows

A Note on Reliability

In a typical company with numerous computers, the best practice for installing updates is to first download and install them in a test environment that closely resembles the one used every day. This makes it possible to test updates before deploying them to production systems, and it greatly decreases the chances of downtime caused by bad updates or updates that break things. However, the more frequent and numerous the updates, the more work this requires. With Windows updates released on average once per week, it is a fine balancing act between letting updates pile up and deciding when to test and deploy them. In Linux, updates are not released in batches; they are released as soon as they are developed. So, instead of installing each update the moment it is released, it probably makes sense to install them in batches to simulate the Microsoft method. However, you will probably find that Linux updates are generally released to fix glitches rather than major security breaches, so they can usually be deployed less frequently than updates released by Microsoft. Again, this varies greatly.
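A minimal sketch of the batching idea, in Python: it groups pending updates by ISO calendar week so that each week's batch can be tested together before deployment. The `batch_by_week` helper is hypothetical, and the package names and release dates are made-up sample data; pulling the real list of pending updates out of yum or another package manager is left out.

```python
from collections import defaultdict
from datetime import date


def batch_by_week(updates):
    """Group (package_name, release_date) pairs into ISO-week batches.

    `updates` is assumed to be a list of (str, datetime.date) tuples.
    Returns {(iso_year, iso_week): [package, ...]}, oldest week first.
    """
    batches = defaultdict(list)
    for package, released in updates:
        iso_year, iso_week, _weekday = released.isocalendar()
        batches[(iso_year, iso_week)].append(package)
    # Sort so the batches can be tested and deployed in order.
    return dict(sorted(batches.items()))


# Hypothetical sample data standing in for a list of pending updates.
pending = [
    ("openssh", date(2024, 3, 4)),
    ("bash", date(2024, 3, 6)),
    ("httpd", date(2024, 3, 12)),
]

for (year, week), packages in batch_by_week(pending).items():
    print(f"{year}-W{week:02d}: {', '.join(packages)}")
```

Each printed line is one batch to push through the test environment; the week boundary is an arbitrary choice and could just as easily be a fortnight or a month.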

Every week, Windows users are urged to apply all of the available updates to their computers. Granted, the updates almost always fix the problem they target. But once in a while, an update will break something else. Everybody who uses Windows has probably witnessed this or heard of it happening. Why is this? My only explanation, again, is the limited number of developers at Microsoft. On a well-tuned Linux computer, you are actually more secure than you would be with Windows: it doesn't seem to have as many security problems or bugs, and when some are found they are fixed very quickly. With closed source software such as Windows, you risk running software that is vulnerable to a malicious attack while you wait for a patch to be released. I pointed this out earlier with the Internet Explorer vulnerability (KB961051), where Microsoft released a bulletin acknowledging a security issue but two days later still had no patch or fix for it, leaving its customers with insecure software while it scrambled to find a fix. Back to Linux: even better, the updates can usually be installed without a reboot. Without a reboot, seriously? Yes. In fact, most software in Linux can be installed without rebooting. This is because Linux is very modular, made up of many components that all work together. The Linux kernel (the core of the operating system) can stay running in memory while you fine-tune other software and settings. We all know how often Windows needs to be rebooted when installing patches or updates; it happens with nearly every one. This is not a big deal for a regular home PC that is probably turned off at night anyway. But what about a server that is answering requests for business-critical applications? The reboot may be quick, but it is still downtime. I don't know about you, but I would prefer my servers to stay running as much as possible.

Linux is designed to stay up and running, and this is apparent in everyday use. Not only is the kernel very modular in design, as I just mentioned, but system services and processes can be paused or reloaded instead of completely restarted, so users are not disconnected. This is one thing that Windows flat out lacks. Windows system services have three options: start, stop, and restart. In Linux, most services offer an additional one: start, stop, restart, and reload. The reload option is very useful; it is usually used to reload a service's configuration without actually restarting it, so that no interruptions take place. This is just plain smart, especially in server environments. On a Windows server, applying such a change means restarting the service, so an after-hours maintenance period would probably have to be scheduled. With Linux and the reload option, maintenance can be done during the day while users are connected and using the service.
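The reload idea can be sketched in a few lines of Python. Many Unix daemons re-read their configuration when they receive the SIGHUP signal, and for many services that is what the reload action ends up doing behind the scenes. The `ConfigurableService` class, its JSON config file, and the `clients` list standing in for connected users are all hypothetical, and the sketch only runs on POSIX systems, where SIGHUP exists.

```python
import json
import signal


class ConfigurableService:
    """Hypothetical long-running service that reloads its settings on SIGHUP."""

    def __init__(self, config_path):
        self.config_path = config_path
        self.clients = []  # stands in for users currently connected
        self.config = self._load()
        # Register the handler: SIGHUP means "re-read your configuration".
        signal.signal(signal.SIGHUP, self._on_reload)

    def _load(self):
        with open(self.config_path) as f:
            return json.load(f)

    def _on_reload(self, signum, frame):
        # Swap in the new settings; the process keeps running and
        # self.clients is never touched, so nobody is disconnected.
        self.config = self._load()
```

Running `kill -HUP <pid>` against a process built this way swaps in the new settings while `clients` is left alone, which is the whole point of reload versus restart.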


Some may argue that two servers could be set up in a cluster, so that when one reboots, the other stays up and continues to answer requests from users. But this requires two servers, which doubles the cost of hardware and software, so it is not a viable option for those trying to keep costs down. And in most cases, when servers reboot, somebody needs to check on them and make sure they come back up correctly. This can be very inconvenient, especially for servers that are scheduled to reboot in the middle of the night. And what about desktop PCs? This isn't as critical, since it only affects the one user who is currently using the PC. But some Windows updates actually make it mandatory that the PC be rebooted right away! Am I serious? Yes, this is definitely true. Windows does offer several options, such as downloading all updates automatically and then prompting to install them. But the default setting is to have Windows download and install the updates automatically. In theory this sounds great: the user won't have to worry about anything, and all of the updates will be installed in the background, right? Not quite. Unfortunately, Windows cannot apply many updates without restarting. See the example screenshot from Windows XP, where the user is given no option but to stop all work and close applications within 5 minutes, or the system will force a reboot itself! Notice that the "Restart later" button is grayed out!

Not only does Windows need to be restarted for most updates released by Microsoft, but even newer versions such as Windows XP still have a Windows 98 feel, requiring a reboot simply to add, install, or enable a hardware device. With Linux, a reboot is almost never needed: the module for the device is simply inserted on the fly, and the device is instantly activated. This is true even for complex devices, since a device's driver is either built directly into the Linux kernel or compiled as a kernel module that can be inserted on the fly.

|

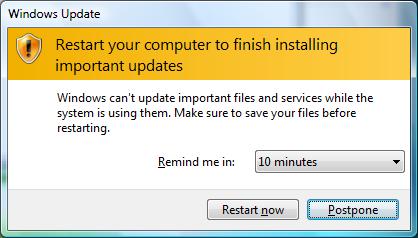

Typical reboot prompt for Windows

XP after installing a simple USB modem device. |
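On Linux, the kernel lists its currently loaded modules in `/proc/modules`, and a tool such as `modprobe` inserts a module on demand. The Python sketch below reads that file to check whether a driver module is loaded. The helper names are hypothetical, and `cdc_acm` (a USB modem driver) plus the sample lines are illustrative; the field layout is the standard one: name, size, use count, modules using it, state, and load address.

```python
def parse_proc_modules(text):
    """Parse /proc/modules text into {module_name: details}.

    Each line reads: name, size in bytes, use count, modules using it
    (comma-separated, or "-" for none), state, and load address.
    """
    modules = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 5:
            continue  # skip blank or unexpected lines
        name, size, refcount, used_by, state = fields[:5]
        modules[name] = {
            "size": int(size),
            # The use count can be "-" on kernels built without module unloading.
            "refcount": int(refcount) if refcount.isdigit() else None,
            "used_by": [] if used_by == "-" else [m for m in used_by.split(",") if m],
            "state": state,
        }
    return modules


def is_loaded(module, path="/proc/modules"):
    """Return True if `module` appears in the kernel's list of loaded modules."""
    # The kernel reports module names with underscores, e.g. "cdc_acm".
    wanted = module.replace("-", "_")
    with open(path) as f:
        return wanted in parse_proc_modules(f.read())
```

On a live system, `is_loaded("cdc_acm")` reads the real `/proc/modules`; after `modprobe cdc_acm` is run as root, it should report True with no reboot involved.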

What's even more disconcerting is that even the latest and greatest version of Windows, Windows Vista, still requires frequent rebooting just to install minor patches from Microsoft. Again, Linux does not need to be restarted after installing most software patches, since they are separate from the Linux kernel, which keeps running. The exact reason Windows needs to be restarted even after installing the simplest of patches is still unknown. The screenshot below shows the message that Vista users get when patches have been downloaded but will not be completely installed until a reboot is done.

Typical reboot prompt for Windows Vista updates and patches.

In Linux you can have your system update itself automatically (the Red Hat Network daemon, or Yum in Fedora), but I usually review the update packages and install them manually if necessary. Installing updates manually is fine for a home environment. In a corporate or enterprise environment, there are other tools for deploying updates. Nothing is perfect, and even in Linux a system might need some attention or have settings checked after an upgrade. This is rare, but sometimes necessary. Luckily, security issues with Linux come up only occasionally, as opposed to Windows, where they are a regular occurrence.

As mentioned already, Linux seldom requires a reboot when installing updates. This can keep Linux servers running for quite a long time. It is definitely not unheard of to have a Linux server running for one, two, or more years without a reboot. Almost every time I have had to reboot a Linux server, it was because of a long power outage in which the battery backup unit simply ran out of power and couldn't keep the servers running. As a result, I have personally witnessed many Linux servers running for well over a year without a reboot. In fact, a company called "Ksplice" [1] has recently come up with a solution for Linux that allows the kernel to be patched in memory, without a reboot! This means that you could keep a Linux machine running indefinitely, provided that it had a reliable power backup in case of a power failure.
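To check this on your own servers: the kernel exposes the time since boot, in seconds, as the first field of `/proc/uptime`. The short Python sketch below converts that value into days, hours and minutes, the same figure the `uptime` command summarizes. The function names are illustrative only.

```python
def parse_uptime(text):
    """Turn the contents of /proc/uptime into (days, hours, minutes).

    The first field is seconds since boot; the second (aggregate idle
    time) is ignored here.
    """
    seconds = int(float(text.split()[0]))
    days, rest = divmod(seconds, 86_400)
    hours, rest = divmod(rest, 3_600)
    return days, hours, rest // 60


def current_uptime(path="/proc/uptime"):
    """Read the live uptime on a Linux host."""
    with open(path) as f:
        return parse_uptime(f.read())
```

On a Linux host, `current_uptime()` reads the live value, while `parse_uptime` can be fed any saved copy of the file, for example one collected from each server by a nightly script.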

In the world of Windows, having a server run this long is essentially unheard of. Why is this? As I have already mentioned, most Microsoft patches require a reboot. I honestly do not know the exact reason for this. I can only speculate that Windows either isn't modular or efficient enough for one component to be updated and restarted without affecting something else, or that it is just too quirky to rely on unless the whole server is rebooted to ensure the updates are installed correctly. Whatever the reason, it is rare to have a Windows server up for more than 30 days straight, since Microsoft releases major updates every 30 days, and sometimes even more frequently if they are critical enough. It is good to know that updates are being released frequently; however, requiring the server to be rebooted is not so good. It leaves users frustrated when they are cut off from the services the server provides.

On top of Microsoft's patches to Windows, there are also numerous cases where software installations conflict with each other, which requires additional reboots as well. Often, two third-party products will conflict, requiring Windows to be rebooted before the next product can be installed. This is demonstrated in the screenshot below for Symantec Endpoint Protection. In this case, some sort of previous installation is pending, so the computer needs to be rebooted before the Symantec Endpoint Protection software can be installed. The error below is reported by the Microsoft Installer itself.

A reboot prompt for Symantec Endpoint Protection antivirus software in Windows XP, requiring a reboot because of another installed product.

By contrast, as I have mentioned before, Fedora and Red Hat Linux use a software installation mechanism called RPM (Red Hat Package Manager). The RPM system is extremely efficient, and it never requires a reboot unless you are installing an update to the kernel package itself. Every other piece of software can be updated and put to work instantly. This is a huge contrast to the Microsoft Installer, which not only can require reboots but can also run into conflicts between different packages.
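The rule described above (only a kernel update calls for a reboot) is simple enough to express in code. This Python sketch assumes a Fedora/RHEL-style system where the kernel ships in packages named `kernel`, `kernel-core` and `kernel-modules`. Those names, the hypothetical `plan_after_update` helper, and the idea of feeding in a list of updated package names are all assumptions to adjust for your distribution. Real tools go further. For example, `needs-restarting` from yum-utils/dnf-utils also looks at which running programs still use replaced files, since an updated library may mean restarting a service even when no reboot is needed.

```python
# Assumed names of the packages that carry the kernel itself on a
# Fedora/RHEL-style system; adjust for your distribution.
KERNEL_PACKAGES = {"kernel", "kernel-core", "kernel-modules"}


def plan_after_update(updated_packages):
    """Decide what an update run requires, using the kernel-only rule.

    Returns ("reboot", [kernel packages]) if any kernel package changed,
    otherwise ("none", []): the new software is usable immediately.
    """
    kernel = sorted(p for p in updated_packages if p in KERNEL_PACKAGES)
    return ("reboot", kernel) if kernel else ("none", [])


print(plan_after_update(["httpd", "openssl-libs"]))
print(plan_after_update(["httpd", "kernel-core", "kernel"]))
```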


OK, so we've acknowledged that rebooting is a nuisance, especially with servers. Not only do systems administrators need to monitor the servers after the reboots, but they also need to keep an eye on the patches that were installed before the reboots. Patching Windows and Linux follows the same concept: software is being updated or upgraded, and that can have a negative impact on the server or computer. Patches can break things, and this can happen with any operating system. Since both Windows and Linux need to be patched, I will not focus on that point. I would, however, like to touch on the rebooting process, especially on servers, and the monitoring and cleanup that is necessary when rebooting Windows servers. Normally, the rebooting must be done after hours so as not to interrupt normal operations. Since Linux does not have to be rebooted, it can normally be patched during the day, sometimes even while people are using it. Patching Windows after hours is no fun. It takes time away from the systems administrators doing the patching, in most cases their personal time in the evening, to monitor the server reboots. Then there's the cleanup afterwards. Wait, what do I mean by cleanup? How can a simple reboot cause that? Well, in most enterprise environments, servers are closely monitored for failures. In this world, a simple reboot can raise red flags, because the server is temporarily unavailable. Some hardware and software monitoring products account for this, and some do not. I will focus on Microsoft's own monitoring system, Microsoft Operations Manager (MOM). MOM is an extensive piece of software that monitors all aspects of Windows servers, hardware and software alike. It is a good product, I admit, and does what it is designed to do. But there is one flaw: Microsoft forgot to put in any functionality to handle servers rebooting for updates.
So, you can have your server patch itself and reboot itself, but MOM will complain extensively as soon as the server reboots. This typically generates a whole slew of errors, leaving the system admin to sort through them one by one, weeding out any real problems from those caused only by the reboot. This is a tedious and very time-consuming task, and it must take place every time the server reboots automatically. A workaround is to put the server in maintenance mode so that no alerts are generated, but this is a manual process as well. Either way, the system admin must take some manual steps.
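Some of that weeding out can be scripted. The Python sketch below separates alerts raised on the rebooted server during a planned reboot window from everything else, so only the remainder needs a human look. It is not a MOM feature or API: the `split_alerts` helper is hypothetical, it assumes you can export alerts as (timestamp, server, message) records, and the 15-minute grace period is an arbitrary example value.

```python
from datetime import timedelta


def split_alerts(alerts, server, reboot_start, grace=timedelta(minutes=15)):
    """Split alerts into (reboot_noise, needs_review).

    `alerts` is a list of (datetime, server_name, message) tuples.
    Alerts from `server` between `reboot_start` and `reboot_start + grace`
    are treated as side effects of the planned reboot.
    """
    window_end = reboot_start + grace
    noise, review = [], []
    for alert in alerts:
        when, host, _message = alert
        if host == server and reboot_start <= when <= window_end:
            noise.append(alert)
        else:
            review.append(alert)  # outside the window or another server
    return noise, review
```

An alert that arrives after the grace period (say, a disk error 40 minutes later) still lands in the review pile, which is the one the administrator actually cares about.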

Microsoft is very keen on rebooting. Some errors in MOM even suggest rebooting as the solution to the problem, as demonstrated in the screenshot below. This is Microsoft's own recommended solution! The screenshot shows a memory error that seems to be common in Windows. I have seen it happen on both virtual and physical machines of different types, so I believe it is a software problem of some sort. As you can see, the resolution says to reboot in order to fix it.


One fun thing to do is check which systems run some of your favorite websites, as well as their total uptimes (time since the last reboot). For instance, the Netcraft website has a neat script that reports what software is running any website you choose. It also tries to determine the server's uptime. So go ahead, query your favorite websites, and see what software they use and how long they have been up since a reboot. One thing I noticed right away when checking sites that use Microsoft IIS is that they do not report their uptimes, even though Windows supports this query. Even Microsoft itself has its servers set so that they don't report an uptime, even though they could if it were enabled. My only guess is that they are embarrassed to display a short uptime. In my findings I did see a couple of servers running Microsoft IIS that had been up for quite some time, but I can only imagine how vulnerable they would be from a security standpoint, since they would not have had any security patches applied since the last reboot! Luckily, Microsoft has taken the time to install enough servers in a cluster to ensure that end users don't see any servers down. This may be OK for a large company like Microsoft that has enough money to invest in the hardware to accomplish this, but for smaller companies it might be a waste, or even outside the budget, to purchase more hardware just because Windows needs to be rebooted so often. A company would be better off purchasing extra hardware to guard against a catastrophic hardware failure, not because the operating system needs to reboot itself often. Spend some time on the Netcraft site checking out various web servers around the Internet, and see what kind of results you find. I will go into much more detail later on the hidden costs of this extra hardware and downtime with Microsoft Windows.


Anomalies Galore

I think we've all seen our Windows PCs lock up, reboot automatically, throw strange unexplained errors, blue screen, and so on. But why does this happen time and time again? It has happened as far back as I can remember, from Windows 95 all the way up to Windows Vista, and on known good hardware. On top of that, there are problems that have been around for years in previous versions of Windows that are still present in the latest patched version of Windows XP! Is it simply that Microsoft doesn't care to fix existing problems, or that it cannot devote the resources to getting them fixed? This question will probably go unanswered indefinitely, as I don't picture Microsoft answering it openly. And it's not as though any developer can just pick up the source code and fix the problem, as in the open source community, so problems linger until Microsoft gets around to fixing them.

Fortunately, in the open source world of Linux, such resource limitations matter far less because of the sheer size of the open development community, so problems like this are fixed and do NOT drag on for years. But I think we have all become accustomed to this type of behavior in Windows, so we just expect it to be normal. But is it really? The answer should be no; it should not happen very often. Probably the most noticeable problems like this that I have seen lately are NTFS filesystem errors on servers (Windows Server 2003) and desktop PCs (Windows XP) that cause the system to crash completely and data to be lost. NTFS is Microsoft's filesystem, used in all its server operating systems (Windows 2000 Server, Windows Server 2003 and Windows Server 2008) as well as its desktop operating systems (Windows 2000, XP and Vista). It has a history in the server arena because it can handle large files, has good performance, and so on. Unfortunately, NTFS is at the center of all hard disk I/O, because it is what organizes the files on your disk. Time and time again I have seen this filesystem become totally corrupted for no rhyme or reason, leaving the server or desktop PC totally useless. Not only does this prevent the system from booting, but recovery is painful, time consuming, and can be VERY expensive if you need to retain the data stored on the disk. From large servers hosting hundreds of mailboxes to simple desktop PCs, I have seen NTFS fail time and time again when there were no hardware problems. I have reinstalled everything on the same hardware, and it would continue to run just fine for quite some time, telling me the problem was caused purely by software, through no fault of the hardware. Unfortunately, these types of anomalies can cause extreme amounts of downtime when the system crashes: basically, as long as it takes to reinstall the operating system and bring it back to the state it was in before the crash.
Fortunately, we have tools such as Norton Ghost that can back up the entire C: partition and restore it if the filesystem gets totally hosed. But I don't think this should happen to begin with, if the hardware is working as it should.

Luckily, the filesystems used in Linux are extremely stable. In my 11 years of experience, I have not once seen a Linux system become totally unstable and fail to boot because of a strange anomaly like the filesystem becoming corrupt on its own. Also, the need to defragment is pretty much non-existent. Most Windows users are probably used to running the defragment utility often to "optimize" performance. It's almost like your house: things need to be cleaned up once in a while to keep them shipshape. But does it have to be this way? Not so with Linux. To date, I have never needed to run a defragment process on any of the filesystems that Linux uses. I have mainly used the ext2, ext3, and XFS filesystems, with very pleasing results. XFS has been my favorite since its early years of being ported to Linux; I started using it around 2000. There is practically no file size limit, it is very efficient at storing lots of small files, and it is a journaling filesystem (changes are first recorded in a log, so the filesystem can be brought back to a consistent state if writing is interrupted, for example by a crash or power loss). I have put the XFS filesystem through stress tests over years and years of use, and I am happy to say that I have had servers using it running for well over a year without a reboot. I have never witnessed any sort of file corruption in any way, shape or form. In fact, the only times those servers were rebooted were due to power failures. In the Windows world, this is plain unheard of. I have screenshots of two of these servers as an example of the uptime; note the total uptime in days. One of these is a web server running Apache and the other a mail server running Sendmail. These servers were eventually rebooted because of a widespread power outage that knocked out power for 2 days, and our battery backup ran out of power to keep the servers running.
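To illustrate what "journaling" means, here is a deliberately simplified write-ahead log in Python. It is not how ext3 or XFS are implemented (they journal filesystem blocks with far more machinery). The `ToyJournal` class, the inode-style keys and the `crash_before_commit` flag are made up to show the core idea: a transaction that is interrupted before its commit record is written is simply discarded on recovery, instead of leaving the filesystem half-updated.

```python
class ToyJournal:
    """A toy write-ahead journal; real filesystems are far more involved.

    Changes are appended to the journal first and only count once a COMMIT
    record for their transaction exists. Recovery replays committed
    transactions and discards any half-written one.
    """

    def __init__(self):
        self.log = []   # stands in for the on-disk journal
        self.disk = {}  # stands in for the filesystem's own structures

    def write_transaction(self, tx_id, changes, crash_before_commit=False):
        for key, value in changes.items():
            self.log.append(("SET", tx_id, key, value))
        if crash_before_commit:
            return  # simulated power loss: the COMMIT record never lands
        self.log.append(("COMMIT", tx_id))
        self.disk = self.recover()  # checkpoint: apply committed changes

    def recover(self):
        """Rebuild state from the journal, ignoring uncommitted transactions."""
        committed = {rec[1] for rec in self.log if rec[0] == "COMMIT"}
        state = {}
        for rec in self.log:
            if rec[0] == "SET" and rec[1] in committed:
                _, _, key, value = rec
                state[key] = value
        return state
```

After a simulated crash in the middle of a transaction, `recover()` returns exactly the state from the last committed transaction, which is why a journaling filesystem does not need a lengthy full check after a power failure.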

A Time Bomb?

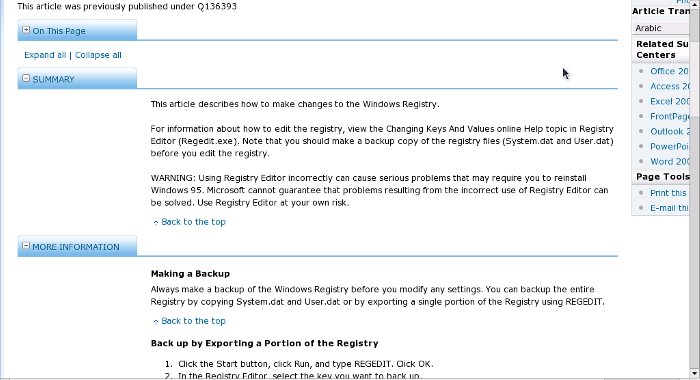

Just today I received an article in my email inbox, part of my regular CNet newsletter, describing faults with the Windows registry and focusing on problems caused by the registry becoming "corrupted". Its focus was on why CD and DVD drives disappear in Windows, and it is filled with a list of other issues and links to possible solutions. Which brings up a pretty good subject: the Windows registry. The purpose of the Windows registry is pretty straightforward: to house all of Windows' settings. It is a nice, convenient way to keep everything in one place, that's for sure. So what is all the fuss about issues and corruption? Well, the whole problem with the registry is that just about every program and service that runs needs to access the registry for something or another. Because of this, the registry is VERY fragile and must be modified with great caution, since so many things depend on it. This makes sense, as critical settings are housed here and one slip can cause some serious damage. This is probably why any article Microsoft posts about a fix within the registry has disclaimers all over it about the danger of modifying the registry. There are countless articles on Microsoft's website that contain solutions to problems and direct the user to modify the registry, yet a few lines down warn how dangerous it can be to modify the registry and state that Microsoft does not guarantee any results from the very modification they are suggesting! One could say this is a little contradictory; however, I don't think Microsoft is going to write a patch for each and every fix that is encountered. It's much easier to post the change that must be made to the registry instead.

Let's face it: the registry is basically a single point of failure in Windows. Luckily, Linux has a much simpler design and does not have a single point of failure like the registry. Programs and the kernel keep their settings in a variety of ways. Often, settings are stored in plain text files on the hard drive. Sometimes they are exposed through the "proc filesystem", a virtual filesystem mounted at /proc that lives in memory rather than on the hard disk. Either way, things are kept simple, and it is far less likely that editing one of these files leaves you with a system that won't boot. There must be a reason that Microsoft puts up all of those warnings, and it is probably because the registry is a single point of failure and very fragile. Having the core of the operating system be this fragile doesn't seem like a great recipe for a stable operating system.

A Microsoft-posted article about how to edit the registry, advising of the danger in doing so.
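To make the contrast with the registry concrete: kernel settings under /proc/sys can be made persistent in a plain text file, /etc/sysctl.conf, with one `key = value` pair per line, and each dotted key corresponds to a file path under /proc/sys. The Python sketch below parses that format and performs the mapping. The helper names are hypothetical, and it is an illustration, not a replacement for the `sysctl` tool.

```python
def parse_sysctl_conf(text):
    """Parse sysctl.conf-style 'key = value' lines into a dict.

    Blank lines and lines starting with '#' or ';' are comments.
    """
    settings = {}
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            # Report the bad line instead of guessing; nothing else is touched.
            raise ValueError(f"line {lineno}: expected 'key = value', got {raw!r}")
        settings[key.strip()] = value.strip()
    return settings


def proc_sys_path(key):
    """Map a sysctl key such as 'net.ipv4.ip_forward' to its /proc/sys file."""
    return "/proc/sys/" + key.replace(".", "/")
```

A typo in such a file produces an error pointing at one line of one file, and the fix is an ordinary text edit.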

A Touch on Stability

I would like to touch on the issue of stability, which I started to get into already. One thing I have noticed over years of using Linux and Windows side by side is that Windows has never been nearly as stable as Linux. This is not just my observation, either; some quick Google or Yahoo searches on the subject will yield plenty of results to back up this statement. It is comical to me that when administrators of Windows machines run across crashes, the usual fix is to reboot and carry on without wondering what happened. It's working after a reboot, so why spend the time trying to figure out what went wrong or caused the crash? This is the mentality of Windows administrators because it is the norm in that environment. But if you step back and consider that Linux does not behave like this, and that Linux crashes are usually caused by faulty hardware or badly programmed applications, a different mentality should be adopted. In 11 years of administering Linux machines, I have witnessed only one crash where the server completely froze with what is known as a "kernel panic". Because this type of crash is so rare, I was able to dig in, discover the issue, and determine a solution. In this case, the stock kernel provided with Red Hat 7.1 for the DEC Alpha architecture (which is NOT the same as the Intel architecture) had been compiled incorrectly. Locating and fixing the problem was by no means an easy task, but there WAS a fix. Luckily, we were able to recompile the kernel, and the server was up and running, rock solid just like any other. Back in the world of Windows, however, crashes and the blue screen of death (BSOD) still occur even in the latest Windows Server 2008 with all of the latest patches. To this day I still see this happen in Microsoft environments quite frequently. My theory is that this is because of the structure of Windows and its multiple points of failure.
And as this subject continues, I reiterate the point that this causes confusion for users, costs companies and individuals in downtime, and can lead to further problems and data corruption, which can cause a chain reaction of problems and further costs. This is no way to run a business's systems, and it should not be accepted as normal operation.

A Closer Look at Windows Anomalies

Unfortunately, having a vast number of anomalies makes things seem even more fragile, knowing that something could suddenly break at any time. Some of this simply cannot be avoided in the world of computers and servers, where things are always bound to go wrong. This is what we call Murphy's Law, which states that if anything can go wrong, it will. Murphy's Law is applied to the world of technology all of the time. But decreasing your chances of having to deal with these problems seems like a logical move to me. Yes, hardware will fail. But modern servers have redundant hardware, so if a component fails, the server can stay up and running, often while the technician repairs it. This redundancy only goes as far as the operating system, though. A server's components can be completely redundant, but if the operating system fails, the redundancy is no good and the server becomes inoperable. I have seen many servers with multiple hardware redundancies crash because of Windows problems. The anomalies with Microsoft products have been so great that I have compiled a list of those I have encountered on multiple occasions, over multiple years and across different versions of Windows, where I have ruled out faulty hardware as the cause, especially since these very same problems recurred in different environments, on completely different hardware, and at different times. To me, some of these should just not be tolerated in a production or business-critical environment.

Top 10 Windows Anomalies


Massive Outages with Massive Usage

As mentioned already, one of Windows' biggest issues is that it is a constant target of viruses, worms, and malware in general. While this may not be entirely the fault of Windows, since its large market share makes it the target in most cases, it can have disastrous consequences on a massive scale if organizations use only Windows in their environment. As some would say, that is "putting all of your eggs in one basket". Diversifying is good for a lot of things, investing for example. It doesn't make sense to put all of your money into one company or stock, because if that company goes belly up, there goes your money. Instead, it's better to invest in several stocks so that if something bad happens to one of them, only a portion of your money is affected. The same applies to IT. It is not a good idea to invest everything in one operating system, especially one that is prone to security problems like Windows. And I would not consider Linux itself a single operating system, since it comes in hundreds of different configurations (or distributions) because it is truly open source (you can have a full-featured computer with Linux, or a very basic stripped-down computer with only a couple of services running on it). Windows itself has only a couple of variations, and whether Windows is running on desktops or servers, they still run the same OS kernel with similar services. I've touched on this before: simply logging in to a Windows server will show a lot of extra junk that should only ever be installed on a desktop computer and never on a server. Microsoft doesn't seem to have any intention of offering a truly separate server OS that contains only the services a server needs. And yes, Microsoft does market its Windows OS separately (for example, Windows 11 and Windows Server 2019), but under the covers many of the same services are installed on both by default.

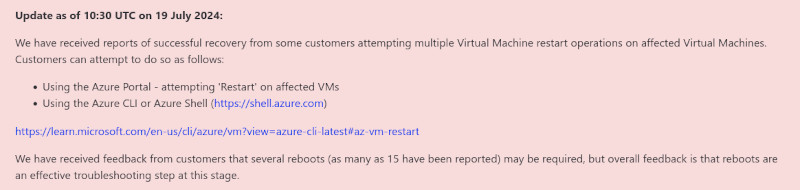

Companies that use only Windows have experienced massive outages, primarily due to security issues. Most of the time, the cause is Microsoft releasing a bad update, or one that conflicts badly with another piece of software, as described previously. Other times it can be due to a third-party product, as in the event on July 19, 2024, when massive IT outages occurred worldwide because a faulty update to CrowdStrike's Falcon security product, which runs on Windows operating systems, crashed PCs and servers on a massive scale with the famous Windows blue screen of death (BSOD). IT organizations across the globe scrambled to reboot and remediate PCs and servers as quickly as possible; however, the impact of this outage was widespread and felt throughout. Meanwhile, systems running Linux were, in many cases, not affected. Sure, Linux has had large-scale software issues too, but they are much less frequent.

One core issue with Windows, since it is the main target of security vulnerabilities, is that it must run security software 24/7, which consumes resources and adds overhead. And yes, Linux has, and should have, security software running on it too, but that software is efficient and not nearly as bloated as what runs on Windows, mostly because Linux has far fewer security issues to begin with. And when a security product like CrowdStrike's releases an update that causes Windows to crash, as in the event on July 19, bad things happen everywhere; in this case, industries all over the globe were affected, including airlines and airports, payroll systems, and thousands of other businesses and organizations. What is even more comical is that Microsoft itself released a statement saying that rebooting the PC or server up to 15 times can resolve the issue. Say what??! I have already addressed the rebooting problem of Windows above, but a 15-time reboot suggestion from the software vendor (Microsoft) is a new one for me. What IT organization has the resources to reboot each and every one of its computers and servers 15 times?!

A Microsoft-posted statement from the Azure Global Status page on July 19, 2024, mentioning a 15-time reboot fix for its Windows operating system.

This level of unreliability should not be tolerated by any critical service, period. It should be a lesson for every corporation and organization affected.

Sure, Linux has had its issues as well, but not a failure of this scale. And yes, there are many cases where certain software is only available on Windows because of its dominant market share. In those cases, a better solution is to use Linux as the core operating system along with a compatibility layer such as Wine. This gives the best of both worlds: the Windows application can be used, but the operating system running it is Linux, which is not affected by third-party software failures such as the CrowdStrike incident. Wine has been mentioned in various places in this article, and a dedicated page covers it in full detail.

Next Section: Maintenance Headache of Windows: Troubleshooting

Previous Section: Maintenance Headache of Windows: Staying Secure, Virus Outbreaks, Malicious Software, Elevated Rights Model


Click Here to Continue reading on making the actual migration.

References

1. Ksplice: Never Reboot Linux